Arducam ToF Camera SDK – for Jetson

About SDK

A software development kit (SDK) is a collection of software development tools in one installable package. They facilitate the creation of applications by having a compiler, debugger and sometimes a software framework. They are normally specific to a hardware platform and operating system combination.

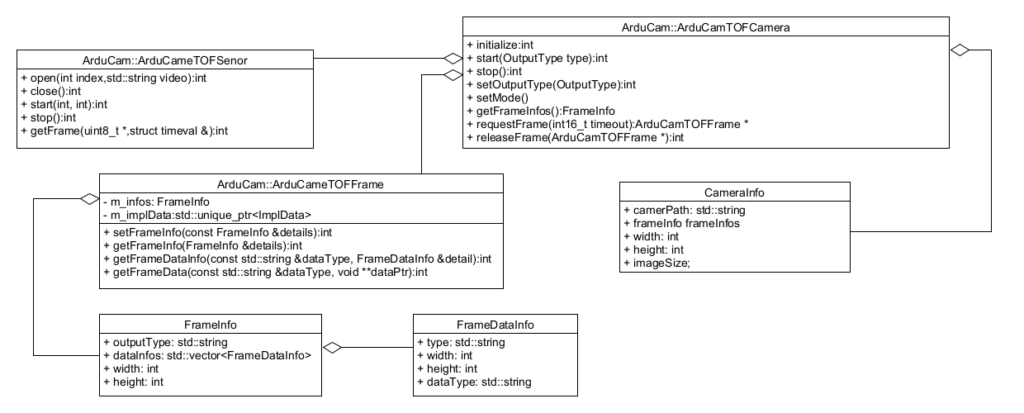

Software Stack of Arducam ToF Camera

ArduCam TOF SDK is a dynamic link library written in C++, There are three classes, Camera, Frame, and Sensor. Camera is responsible for managing camera image acquisition calculation, Frame is responsible for memory management of camera data storage, and Sensor is the specific implementation of calling the camera. It is called as a member object in the Camera class, and users generally do not touch it.

Using Arducam ToF Camera SDK

SDK Installation

The SDK will be installed together with the camera driver and necessary dependencies when you complete running the following steps. It is the same set of commands as Step1,2,3 instructed in

Follow each of the steps by running their respective command shown below.

Step 1. Pull the repository.

git clone https://github.com/ArduCAM/Arducam_tof_camera.git

Step 2. Change the directory to Arducam_tof_camera

cd Arducam_tof_camera

Step 3. Installation (Driver, Dependencies, SDK, OpenCV)

./Install_dependencies_jetson.sh

When you see the reboot prompt, enter y.

Running Examples

Examples with C

SDK workflow

- create a camera instance.

- start the camera.

- request a frame.

- get frame data

- release frame space

- stop the camera

Demo

#include "ArduCamDepthCamera.h"

#include <stdlib.h>

#include <stdio.h>

void getPreview(uint8_t *preview_ptr, float *phase_image_ptr, float *amplitude_image_ptr)

{

unsigned long int len = 240 * 180;

for (unsigned long int i = 0; i < len; i++)

{

uint8_t amplitude = *(amplitude_image_ptr + i) > 30 ? 254 : 0;

float phase = ((1 - (*(phase_image_ptr + i) / 2)) * 255);

uint8_t depth = phase > 255 ? 255 : phase;

*(preview_ptr + i) = depth & amplitude;

}

}

int main()

{

ArducamDepthCamera tof = createArducamDepthCamera();

FrameBuffer frame;

if (init(tof,CSI,DEPTH_TYPE,0))

exit(-1);

if (start(tof))

exit(-1);

uint8_t *preview_ptr = malloc(180*240*sizeof(uint8_t)) ;

float* depth_ptr = 0;

int16_t *raw_ptr = 0;

float *amplitude_ptr = 0;

FrameFormat format;

if ((frame = requestFrame(tof,200)) != 0x00){

format = getFormat(frame,DEPTH_FRAME);

}

for (;;)

{

if ((frame = requestFrame(tof,200)) != 0x00)

{

depth_ptr = (float*)getDepthData(frame);

printf("Center distance:%.2f.\n",depth_ptr[21600]);

amplitude_ptr = (float*)getAmplitudeData(frame);

getPreview(preview_ptr,depth_ptr,amplitude_ptr);

releaseFrame(tof,frame);

}

}

if (stop(tof))

exit(-1);

return 0;

}

Content in CMakeList.txt

cmake_minimum_required(VERSION 3.4)

SET(CMAKE_BUILD_TYPE "Debug")

SET(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -O0 -Wall -g2 -ggdb")

project(example_tof)

include_directories(BEFORE /usr/include/c /usr/include/cpp)

add_executable( example_tof your_filename.c )

target_link_libraries( example_tof ArducamDepthCamera_c pthread)

Compile & Run

mkdir build && cd build

cmake ..

make

Results

Examples with C++

*SDK workflow*

- create a camera instance.

- initialize camera instance.

- start the camera.

- request a frame.

- get frame data

- release frame space

- stop the camera

Demo

#include "ArduCamTOFCamera.hpp"

#include <opencv2/core.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <chrono>

#include <iostream>

// MAX_DISTANCE value modifiable is 2 or 4

#define MAX_DISTANCE 4

void display_fps(void)

{

static int count = 0;

++count;

static std::chrono::high_resolution_clock::time_point time_beg = std::chrono::high_resolution_clock::now();

std::chrono::high_resolution_clock::time_point time_end = std::chrono::high_resolution_clock::now();

std::chrono::duration<double, std::ratio<1, 1>> duration_s = time_end - time_beg;

if (duration_s.count() >= 1)

{

std::cout << "fps:" << count << std::endl;

count = 0;

time_beg = time_end;

}

}

void getPreview(uint8_t *preview_ptr, float *phase_image_ptr, float *amplitude_image_ptr)

{

auto len = 240 * 180;

for (auto i = 0; i < len; i++)

{

uint8_t mask = *(amplitude_image_ptr + i) > 30 ? 254 : 0;

float depth = ((1 - (*(phase_image_ptr + i) / MAX_DISTANCE)) * 255);

uint8_t pixel = depth > 255 ? 255 : depth;

*(preview_ptr + i) = pixel & mask;

}

}

cv::Rect seletRect(0, 0, 0, 0);

cv::Rect followRect(0, 0, 0, 0);

void onMouse(int event, int x, int y, int flags, void *param)

{

if (x < 4 || x > 251 || y < 4 || y > 251)

return;

switch (event)

{

case cv::EVENT_LBUTTONDOWN:

break;

case cv::EVENT_LBUTTONUP:

seletRect.x = x - 4 ? x - 4 : 0;

seletRect.y = y - 4 ? y - 4 : 0;

seletRect.width = 8;

seletRect.height = 8;

break;

default:

followRect.x = x - 4 ? x - 4 : 0;

followRect.y = y - 4 ? y - 4 : 0;

followRect.width = 8;

followRect.height = 8;

break;

}

}

int main()

{

ArduCam::ArduCamTOFCamera tof;

ArduCam::FrameBuffer *frame;

if (tof.init(ArduCam::CSI,ArduCam::DEPTH_TYPE)){

std::cerr<<"initialization failed"<<std::endl;

exit(-1);

}

if (tof.start()){

std::cerr<<"Failed to start camera"<<std::endl;

exit(-1);

}

// Modify the range also to modify the MAX_DISTANCE

tof.setControl(ArduCam::RANGE,MAX_DISTANCE);

ArduCam::CameraInfo tofFormat = tof.getCameraInfo();

float *depth_ptr;

float *amplitude_ptr;

uint8_t *preview_ptr = new uint8_t[tofFormat.height * tofFormat.width];

cv::namedWindow("preview", cv::WINDOW_AUTOSIZE);

cv::setMouseCallback("preview", onMouse);

for (;;)

{

frame = tof.requestFrame(200);

if (frame != nullptr)

{

depth_ptr = (float *)frame->getData(ArduCam::DEPTH_FRAME);

amplitude_ptr = (float *)frame->getData(ArduCam::AMPLITUDE_FRAME);

getPreview(preview_ptr, depth_ptr, amplitude_ptr);

cv::Mat result_frame(tofFormat.height, tofFormat.width, CV_8U, preview_ptr);

cv::Mat depth_frame(tofFormat.height, tofFormat.width, CV_32F, depth_ptr);

cv::Mat amplitude_frame(tofFormat.height, tofFormat.width, CV_32F, amplitude_ptr);

cv::applyColorMap(result_frame, result_frame, cv::COLORMAP_JET);

amplitude_frame.convertTo(amplitude_frame, CV_8U,255.0/1024,0);

cv::imshow("amplitude", amplitude_frame);

cv::rectangle(result_frame, seletRect, cv::Scalar(0, 0, 0), 2);

cv::rectangle(result_frame, followRect, cv::Scalar(255, 255, 255), 1);

std::cout << "select Rect distance: " << cv::mean(depth_frame(seletRect)).val[0] << std::endl;

cv::imshow("preview", result_frame);

if (cv::waitKey(1) == 27)

break;

display_fps();

}

tof.releaseFrame(frame);

}

if (tof.stop())

exit(-1);

return 0;

}

Content in CMakeList.txt

cmake_minimum_required(VERSION 3.4)

SET(CMAKE_BUILD_TYPE "Debug")

SET(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -O0 -Wall -g2 -ggdb")

project(example_tof)

include_directories(BEFORE /usr/include/c /usr/include/cpp)

find_package( OpenCV REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

add_executable(example_tof your_filename.cpp )

target_link_libraries( example_tof ArducamDepthCamera ${OpenCV_LIBS} )

Compile & Run

mkdir build && cd build

cmake ..

make

Results

Example with Python

SDK workflow

- create a camera instance.

- initialize camera instance.

- start the camera.

- request a frame.

- get frame data

- release frame space

- stop the camera

Demo

import sys

import cv2

import numpy as np

import ArduCamDepthCamera as ac

dir(ac)

def process_frame(depth_buf: np.ndarray, amplitude_buf: np.ndarray) -> np.ndarray:

depth_buf = np.nan_to_num(depth_buf)

amplitude_buf[amplitude_buf<=30] = 0

amplitude_buf[amplitude_buf>30] = 255

depth_buf = (1 - (depth_buf/2)) * 255

depth_buf = np.clip(depth_buf, 0, 255)

result_frame = depth_buf.astype(np.uint8) & amplitude_buf.astype(np.uint8)

return result_frame

selectRect_x1 = 0

selectRect_y1 = 0

selectRect_x2 = 0

selectRect_y2 = 0

followRect_x1 = 0

followRect_y1 = 0

followRect_x2 = 0

followRect_y2 = 0

def on_mouse(event, x, y, flags, param):

global followRect_x1,followRect_y1,followRect_x2,followRect_y2

global selectRect_x1,selectRect_y1,selectRect_x2,selectRect_y2

# if (x < 4 or x > 251 or y < 4 or y > 251):

# return

if event == cv2.EVENT_LBUTTONDOWN:

pass

elif event == cv2.EVENT_LBUTTONUP:

selectRect_x1 = x - 4 if x - 4 > 0 else 0

selectRect_y1 = y - 4 if y - 4 > 0 else 0

selectRect_x2 = x + 4 if x + 4 < 240 else 240

selectRect_y2= y + 4 if y + 4 < 180 else 180

else:

followRect_x1 = x - 4 if x - 4 > 0 else 0

followRect_y1 = y - 4 if y - 4 > 0 else 0

followRect_x2 = x + 4 if x + 4 < 240 else 240

followRect_y2 = y + 4 if y + 4 < 180 else 180

if __name__ == "__main__":

cam = ac.ArducamCamera()

if cam.init(ac.TOFConnect.CSI,ac.TOFOutput.DEPTH,0) != 0 :

print("initialization failed")

if cam.start() != 0 :

print("Failed to start camera")

cv2.namedWindow("preview", cv2.WINDOW_AUTOSIZE)

cv2.setMouseCallback("preview",on_mouse)

while True:

frame = cam.requestFrame(200)

if frame != None:

depth_buf = frame.getDepthData()

amplitude_buf = frame.getAmplitudeData()

amplitude_buf[amplitude_buf<0] = 0

amplitude_buf[amplitude_buf>255] = 255

cam.releaseFrame(frame)

cv2.imshow("preview_amplitude", amplitude_buf.astype(np.uint8))

result_image = process_frame(depth_buf,amplitude_buf)

result_image = cv2.applyColorMap(result_image, cv2.COLORMAP_JET)

cv2.rectangle(result_image,(selectRect_x1,selectRect_y1),(selectRect_x2,selectRect_y2),(128,128,128), 1)

cv2.rectangle(result_image,(followRect_x1,followRect_y1),(followRect_x2,followRect_y2),(255,255,255), 1)

print("select Rect distance:",np.mean(depth_buf[selectRect_x1:selectRect_x2,selectRect_y1:selectRect_y2]))

cv2.imshow("preview",result_image)

key = cv2.waitKey(1)

if key == ord("q"):

exit_ = True

cam.stop()

sys.exit(0)

Run

python3 your_filename.py

Results (to be updated soon)

API References for C

You can find C references here.

API References for C++

You can find C++ references here.